This is part of a series on machine intelligence companies. We’ve interviewed Beagle and Mariana, and now we’re featuring Beyond Verbal, which is applying AI and machine learning to emotions.

Computers are now better than ever at figuring out what we’re saying to them. They can answer complex questions, assist with everyday tasks, and tap into many of the apps we use so often.

But they don’t know why we’re making those requests.

Are we asking Siri to play music because we’re happy or sad? If it knew, it would be able to select the right soundtrack for the moment. Should Cortana really send that text now, or would it be better to wait for emotions to run lower?

Beyond Verbal, an Israeli company, teaches computers and devices how to answer those questions.

Many companies are working on artificial intelligence, but Beyond Verbal is focused on emotional intelligence. This is a difficult task, made even harder by the fact that people don’t all express their emotions in the same way.

We spoke with Bianca Meger, Beyond Verbal’s Director of Marketing, about how the company is using artificial intelligence and machine learning in its efforts to finally teach computers how we humans feel.

[Editor’s note: The interview questions and responses below have been edited for clarity and length.]

What is your role at Beyond Verbal and what does that entail?

I am the director of marketing. In a startup environment, though, you may have a specific job title, but you end up doing many different things.

My job is to create awareness and understanding of our innovative and truly unique technology, whether through PR or marketing campaigns. In short, my job is to make the user touchpoints accessible and interactive so people can understand the product intuitively and easily.

Another duty of mine is to interact with potential and existing customers. It’s really a sales and strategic-accounts hat that I wear in addition to my marketing duties.

From an employee perspective, I think the biggest advantage of our technology is that it’s so versatile that you never get bored. Every single day brings a new trial or application that opens up new possibilities. It’s extremely exciting.

What did you do before joining Beyond Verbal?

I’m actually a rare case, if I can put it like that. I’ve had a lot of experience in marketing and business development, but not in the high-tech vertical. Instead, I come from a real estate development background.

Our CEO, when he received my resume, kind of took a gamble on me because I didn’t have the high-tech lingo and experience. But at the end of the day, the core principles of marketing are the same — people have a need that you have to fulfill.

Of course, jumping from shopping malls to high tech meant a very different job. There is some overlap, though: neuroscience is at the core of our technology, and in my previous positions I applied a lot of neuromarketing and related science to improve shoppers’ experience in our malls.

The transition from implementation — using these tools — to developing new technologies that can help people was a very attractive challenge for me.

What’s the most difficult aspect of your role?

The technology is extremely innovative. It’s really out there. I think our job is difficult because we have to make it really easy and simple for people to use.

How do you collect a voice and run it through the engine for analysis? Analyzing voice files through our API is an extremely simple process. You stream live audio or a pre-recorded file through the API and receive an analysis in near real time.
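Beyond Verbal’s actual API is not described in this interview, so the following is only a minimal Python sketch of the general streaming pattern, assuming a PCM WAV input: audio is read in short, fixed-length chunks, and each chunk is handed off as soon as it is read rather than after the whole recording finishes. The `send_chunk` callback is a hypothetical placeholder for whatever upload call a real client would make.

```python
import io
import wave
from typing import Callable, Iterator


def iter_audio_chunks(wav_file, seconds_per_chunk: float = 1.0) -> Iterator[bytes]:
    """Yield raw PCM frames from a WAV file in fixed-duration chunks."""
    with wave.open(wav_file, "rb") as wav:
        frames_per_chunk = max(1, int(wav.getframerate() * seconds_per_chunk))
        while True:
            frames = wav.readframes(frames_per_chunk)
            if not frames:  # end of file
                break
            yield frames


def stream_for_analysis(wav_file, send_chunk: Callable[[bytes], None]) -> int:
    """Pass every chunk to `send_chunk` (hypothetical upload hook); return chunk count."""
    count = 0
    for chunk in iter_audio_chunks(wav_file):
        send_chunk(chunk)  # placeholder: a real client would stream this to the service
        count += 1
    return count


if __name__ == "__main__":
    # Build 2.5 seconds of silent 16-bit mono audio at 8 kHz, entirely in memory.
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(8000)
        w.writeframes(b"\x00\x00" * 20000)
    buf.seek(0)
    sent = []
    print(stream_for_analysis(buf, sent.append), [len(c) for c in sent])
```

Streaming in chunks is what makes “practically in real time” possible: the service can start working on the first second of speech while the speaker is still talking.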

We essentially turn any device with a microphone and Internet connection into an emotional sensor.

From a business perspective, we don’t have the problem of “where will we add the most value?” Rather, we add value in so many different verticals that the question becomes “where can we make the biggest difference?”

Sometimes it’s difficult to stay focused when new use cases keep popping up, which was one of the main reasons we decided to launch our cloud-based API. This way, developers can benefit from integrating our technology into their solutions while we stay focused on executing the company’s vision.

What is emotional intelligence? How is it quantified and analyzed?

Today, if you ask Siri how you’re feeling, she can’t tell you. But with emotional intelligence analytics integrated, you could ask Siri how you’re feeling, and she could give you a proper response.

If you seem depressed, Siri could direct you to an ice cream parlor. Or if you’re frustrated, she could schedule a call with someone you need to talk to.

We essentially give machines a new layer of emotional data, which enables them to really understand how we feel. After all, it’s not just what we say, but how we say it.

Our vision is to allow machines to understand us as humans on an emotional level. Combining emotions with content creates a very strong synergy.

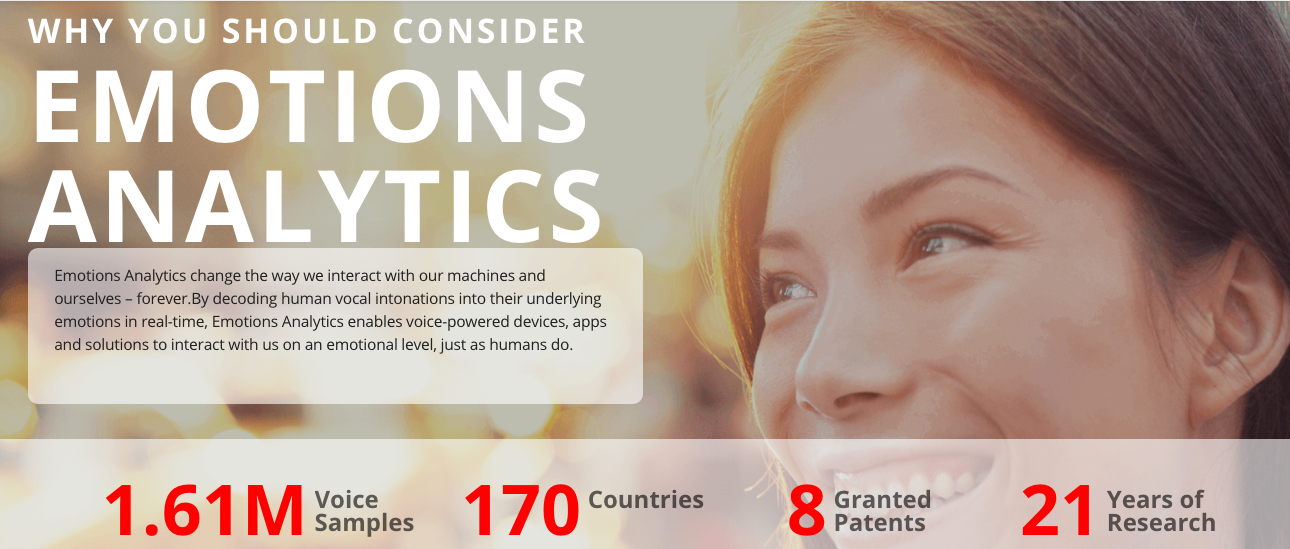

I think what makes Beyond Verbal so unique is the fact that we started this journey 21 years ago, when Dr. Yoram Levanon observed that babies can tell whether you are speaking to them in a friendly or an angry way, even though they don’t understand the words.

Our technology is based on the extensive research Dr. Levanon has done over the years. He has a great deal of experience researching voice-driven emotions analytics.

Now, with advances in artificial intelligence and machine learning, we can feed his expertise and our pre-labeled data set into the system and find patterns we couldn’t see before. Our “magic sauce” is that the input we give to machine learning is of very high quality, which significantly improves our accuracy.

What are the benefits of analyzing someone’s emotional state?

Our intention at Beyond Verbal is really for people to understand themselves better.

We as humans are pretty apt at understanding how other people come across but don’t always understand how we come across. So for us, this is a product that can help you understand yourself better and thereby improve your life.

What we’ve realized over the past two years is that our emotions over time have a direct effect on our well-being. Constant loneliness, for example, can lead to depression. So tracking emotions can be a valid way of understanding how they affect our emotional well-being.

Imagine if I (as a mother) had a way of analyzing my children’s emotions throughout the day and getting notifications when something was wrong. Then, when I came home from work, I would know to be more sensitive to my daughter if she had a bad day, or to ask what happened if I received a notification that she was sad.

In today’s hectic world, we’re so busy that we don’t always pick up on the little emotional cues that we or our loved ones give off. At Beyond Verbal, we want to provide a tool for people to track emotions in a passive, non-intrusive, and continuous way.

In different verticals, we have different APIs and objectives for our customers. Call centers have a different need than market researchers, for example. But in the long run, the vision of Beyond Verbal is to see where we can make a difference in the health sector.

Currently, we are conducting a research project with Mayo Clinic in the US. We may be able to detect from someone’s voice whether they are at risk of a heart attack.

So far, the initial results are very positive. We plan to present this research within the next six months to a year. The potential is astounding: the voice might be able to tell us not just the speaker’s emotions or well-being but also their physical health.

On a technical level, what’s the hardest aspect of emotions analytics?

I think the hardest part would be the lack of science behind emotions.

Emotions are very intuitive in many ways. When someone says something to me, I can interpret the intonation completely differently from the way you do. (I think they said it in an angry voice, you think they said it sarcastically, and neither interpretation is inherently right or wrong.)

There’s no established, standardized science of emotions. So taking that first step toward a science that actually makes sense was the hardest part.

Today, however, the next milestones we face are reducing the time it takes to send back an analysis and separating different speakers. One of our current objectives is to split up a conversation so the engine can tell which voice belongs to whom and analyze each person’s emotions simultaneously.

What can we expect from Beyond Verbal in the future?

We’ve recently launched our cloud-based API, streamlining and automating the integration of our software into any device or application. This enables users to register directly online, try our technology firsthand, and integrate it into their own solutions.

Our near-term goal is to integrate voice-driven emotions analytics into as many applications as possible, adding value through an understanding of emotions.

Based on our research with Mayo Clinic, our main focus is on m-Health. In the coming weeks, we will launch an m-Health research platform that transforms vocal intonations into measurable biomarkers.

We’ll be able to do research on ailments ranging from PTSD to depression to Alzheimer’s, and many more. This will create a whole environment where we can add a layer of emotional, and potentially even health, data to researchers’ existing studies.

What’s the most exciting trend in machine learning from Beyond Verbal’s perspective?

At a high level, one way to look at machine learning algorithms is to distinguish between “supervised” and “unsupervised” methodologies.

This refers to whether the algorithm learns from examples that are already labeled with the conditions it should recognize, or is left to find patterns and insights in the data on its own. Both are very exciting methodologies.
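To make the distinction concrete, here is a toy Python sketch, not Beyond Verbal’s method, on made-up two-number “voice feature” vectors (assumed here to be normalized pitch and energy) with invented labels. The supervised half, a nearest-centroid classifier, learns from examples that already carry labels; the unsupervised half, plain k-means, has to discover the groupings without being told any labels.

```python
from math import dist
from statistics import mean

Point = tuple[float, float]  # hypothetical features: (normalized pitch, energy)


def centroid(points: list[Point]) -> Point:
    return (mean(p[0] for p in points), mean(p[1] for p in points))


def fit_supervised(samples: list[Point], labels: list[str]) -> dict[str, Point]:
    """Nearest-centroid classifier: one centroid per known label."""
    by_label: dict[str, list[Point]] = {}
    for p, label in zip(samples, labels):
        by_label.setdefault(label, []).append(p)
    return {label: centroid(pts) for label, pts in by_label.items()}


def predict(model: dict[str, Point], p: Point) -> str:
    """Return the label whose centroid is closest to p."""
    return min(model, key=lambda label: dist(model[label], p))


def fit_unsupervised(samples: list[Point], k: int = 2, iterations: int = 10) -> list[Point]:
    """Plain k-means: finds k groups without using any labels."""
    centers = list(samples[:k])  # naive initialization: the first k samples
    for _ in range(iterations):
        clusters: list[list[Point]] = [[] for _ in range(k)]
        for p in samples:
            nearest = min(range(k), key=lambda i: dist(centers[i], p))
            clusters[nearest].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = [centroid(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers


if __name__ == "__main__":
    samples = [(0.9, 0.8), (0.85, 0.9), (0.2, 0.1), (0.1, 0.2)]
    labels = ["aroused", "aroused", "calm", "calm"]  # invented labels
    model = fit_supervised(samples, labels)
    print(predict(model, (0.8, 0.7)))
    print(fit_unsupervised(samples))
```

Note the difference in output: the supervised model answers with a named label, while k-means only returns group centers, and a human still has to decide what each group means.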

What advances in machine learning have benefited Beyond Verbal the most?

Our unique ability to leverage more than 21 years of research in this space shows best when we use supervised machine learning methods.

This process allows us to use some of the unique, patented features and capabilities we’ve discovered over the years to achieve fascinating scientific breakthroughs.

Another key ingredient for success with machine learning is correctly labeled big data. At Beyond Verbal, we have developed mechanisms for effective data collection and labeling as input to our AI system, and we use a growing database of over 2 million voice samples for that purpose.
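The interview doesn’t say how Beyond Verbal labels its samples. One common way to cope with the listener disagreement described earlier (one person hears anger, another hears sarcasm) is to collect several labels per clip and keep only clips where most raters agree. A hypothetical Python sketch, with an assumed 60% agreement threshold and invented clip IDs:

```python
from collections import Counter


def aggregate_labels(annotations: dict[str, list[str]],
                     min_agreement: float = 0.6) -> dict[str, str]:
    """Majority-vote one label per clip; drop clips where raters disagree too much.

    `annotations` maps a (hypothetical) clip ID to the labels given by each rater.
    """
    result: dict[str, str] = {}
    for clip_id, votes in annotations.items():
        if not votes:  # no ratings yet: nothing to aggregate
            continue
        label, count = Counter(votes).most_common(1)[0]
        if count / len(votes) >= min_agreement:
            result[clip_id] = label
    return result


if __name__ == "__main__":
    annotations = {
        "clip1": ["angry", "angry", "sarcastic"],   # 2 of 3 agree: kept
        "clip2": ["angry", "sarcastic", "neutral"],  # no majority: dropped
    }
    print(aggregate_labels(annotations))
```

Dropping ambiguous clips shrinks the data set, which is one reason a large pool of samples matters: only the clips people agree on end up as training labels.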

Are there any limitations on machine learning Beyond Verbal would like to see removed?

One of the biggest challenges in machine learning is overfitting.

Overfitting happens when a model becomes so complex that it simply “remembers” a particular input set instead of “thinking” in new directions, so it fails to recognize new samples.

There are different ways to overcome this challenge, but there isn’t yet a clear-cut solution that allows you to build machines that “learn” to “think” like a human brain.

One way to address this is with the labeled big data mentioned above, which is where Beyond Verbal’s experience comes into play.
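A toy Python illustration of overfitting, using invented data and labels rather than anything from Beyond Verbal: a hypothetical “memorizer” that stores every training example scores perfectly on data it has already seen but cannot label anything new, while a simpler rule learned from the same data (a single threshold on an assumed energy feature) generalizes to held-out samples. Comparing accuracy on training data with accuracy on held-out data is the standard way to spot the problem.

```python
from typing import Callable

Sample = tuple[tuple[float, float], str]  # ((pitch, energy), label), both hypothetical
Model = Callable[[tuple[float, float]], str]


def accuracy(model: Model, data: list[Sample]) -> float:
    """Fraction of samples the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)


def train_memorizer(train: list[Sample]) -> Model:
    """Overfit on purpose: memorize exact feature vectors, know nothing else."""
    table = dict(train)
    return lambda x: table.get(x, "unknown")


def train_threshold(train: list[Sample]) -> Model:
    """Simpler model: one energy cutoff halfway between the two class means."""
    high = [x[1] for x, y in train if y == "aroused"]
    low = [x[1] for x, y in train if y == "calm"]
    cut = (sum(high) / len(high) + sum(low) / len(low)) / 2
    return lambda x: "aroused" if x[1] > cut else "calm"


if __name__ == "__main__":
    train = [((0.9, 0.8), "aroused"), ((0.85, 0.9), "aroused"),
             ((0.2, 0.1), "calm"), ((0.1, 0.2), "calm")]
    held_out = [((0.8, 0.7), "aroused"), ((0.15, 0.25), "calm")]
    for name, model in [("memorizer", train_memorizer(train)),
                        ("threshold", train_threshold(train))]:
        print(name, accuracy(model, train), accuracy(model, held_out))
```

The memorizer’s perfect training score is exactly the trap described above; only the held-out score reveals that it has learned nothing transferable.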

Does Beyond Verbal consider itself a machine learning company?

At Beyond Verbal, we don’t see ourselves as a machine learning company.

For us, machine learning is only a toolset for achieving our goals, one of a variety of tools we use. There are other tools we developed in the pre-machine learning era, which we continue to use.

How serious a threat do you consider machine learning initiatives from large companies like Apple and Google?

I don’t think we’re competing with them at all. We believe what we’re doing is complementary to artificial intelligence, because it provides an additional set of emotional data that can be added to AI systems and used to build new features.

We are part of this new wave of artificial intelligence, which has definitely helped us in a lot of ways, and our technology can help those companies too.

This post is part of a series of interviews with machine intelligence companies that are harnessing the power of machine learning and artificial intelligence in innovative ways. Stay tuned for more!